Dig out your old grammar workbooks. Got them? OK, now throw them away. That’s right — we’re giving you permission to break some of those old-school rules. The English language is constantly evolving, meaning rules that were once drilled into your head by schoolteachers are now more like guidelines, and sometimes it’s OK to ignore them. Here are four grammar rules you no longer need to stress about. (We’re breaking one of the rules right away.)

1. Don’t end sentences with prepositions

“You don’t know with whom you’re messing!” is probably not something you’d hear during a heated argument; it doesn’t quite roll off the tongue. Chopping and restructuring prepositional phrases was probably one of those lessons touted by your seventh-grade English teacher, but the need for such a rule is questionable at best. Avoiding a preposition at the end makes a sentence wordier, does nothing to clarify its meaning, and can make the speaker sound awkwardly pretentious.

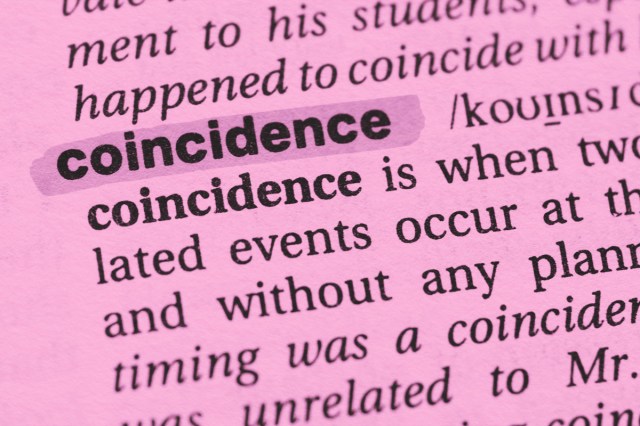

Merriam-Webster argues in favor of ditching this rule, too, claiming that it was made up by grammarians trying to force English to fit Latin rules. Seventeenth-century linguists argued that because a preposition can’t be stranded in Latin, the same should be true for English. But Latin departs from English in myriad ways, one of which is its treatment of stranded prepositions. By the 20th century, almost all style and usage guides had given up any argument against the terminal preposition, so there’s no reason to twist your wording into awkward constructions.

The one exception concerns unnecessary tacked-on prepositions: ones added to the end of a sentence when they aren’t needed. For example, “Where is this bus going to?” can easily be streamlined to “Where is this bus going?” Dropping the extra word makes the sentence more concise.

2. Don’t split infinitives

“To go boldly where no one has gone before” just doesn’t have the same ring as Captain Picard’s tagline “To boldly go where no one has gone before.” Either way, the adverb “boldly” modifies the infinitive “to go,” but placing it before the verb emphasizes the special intent of the action before the listener even hears the verb. Trekkies know that something bold is about to happen.

The rule of not splitting infinitives is yet another carryover from Latin. Latin infinitives are a single word, indicating to some linguists that English infinitives should be treated as a single unit. But again, English is not Latin. Split infinitives have been used by some of English’s best writers, including Benjamin Franklin, William Wordsworth, Samuel Johnson, and George Bernard Shaw, so why not you?

We’ll add a caveat here: we’re not recommending that every infinitive be split, only saying that splitting one isn’t a grammatical crime. Leaving the infinitive intact is preferable in most cases, especially in formal or academic writing. But in creative writing, or when you’re looking for a certain style or emphasis, don’t let the rule hold you back.

3. Never begin a sentence with a conjunction

“But since writing is communication, clarity can only be a virtue. And although there is no substitute for merit in writing, clarity comes closest to being one,” William Strunk Jr. & E. B. White wrote in The Elements of Style.

Beginning a sentence with a conjunction has long been considered a grave grammatical sin. But doing so helps keep thoughts separate, saves you from a confusing cacophony of commas, and lets your reader breathe between thoughts. Conjunctions are sometimes remembered with the mnemonic FANBOYS (“for,” “and,” “nor,” “but,” “or,” “yet,” and “so”), though Merriam-Webster’s mnemonic WWWFLASHYBONNBAN (“whether,” “well,” “why,” “for,” “likewise,” “and,” “so,” “however,” “yet,” “but,” “or,” “nor,” “now,” “because,” “also,” and “nevertheless”) is more accurate. Words like these have been used to start sentences for over a millennium.

The Bible, for example, uses conjunction-led sentences generously:

In the beginning God created the heaven and the earth. And the earth was without form, and void; and darkness was upon the face of the deep. And the Spirit of God moved upon the face of the waters. And God said, ‘Let there be light,’ and there was light.

As further evidence, the AP Stylebook and the Chicago Manual of Style both permit the use of conjunctions to start sentences.

4. Never start a sentence with “hopefully”

“Hopefully, the taxi will arrive soon.” “Hopefully” has been unfairly singled out by grammarians as the adverb you should never use to start a sentence. According to the rule, this sentence means that the taxi is acting in a hopeful manner, which is impossible for an inanimate object. Making the speaker the hopeful one instead would turn it into “It is hoped that the taxi will arrive soon” or “I am hopeful that the taxi will arrive soon.”

But those wordings are unnecessarily awkward and formal, and English can bend for the sake of conversation. Besides, rarely do grammarians take issue with other adverbs such as “clearly,” “unbelievably,” or “fortunately” modifying the whole sentence that follows them.

Hopefully, you’re able to concisely write to someone without worrying about unnecessary grammar rules. But if they can’t appreciate your interpretation of the English language, find new friends to share your writing with.